Gathering is Aaron Wong-Ellis, Anthony Furia, Hugo Flammin, Isaac Strang, Kevin Meric and Nuff (that's me!). On this project, Aaron was the software architect and project lead. Anthony, Kevin and I designed the installation experience.

You can try Biometric Bias here: https://dw0mwjp4zbq7.cloudfront.net/

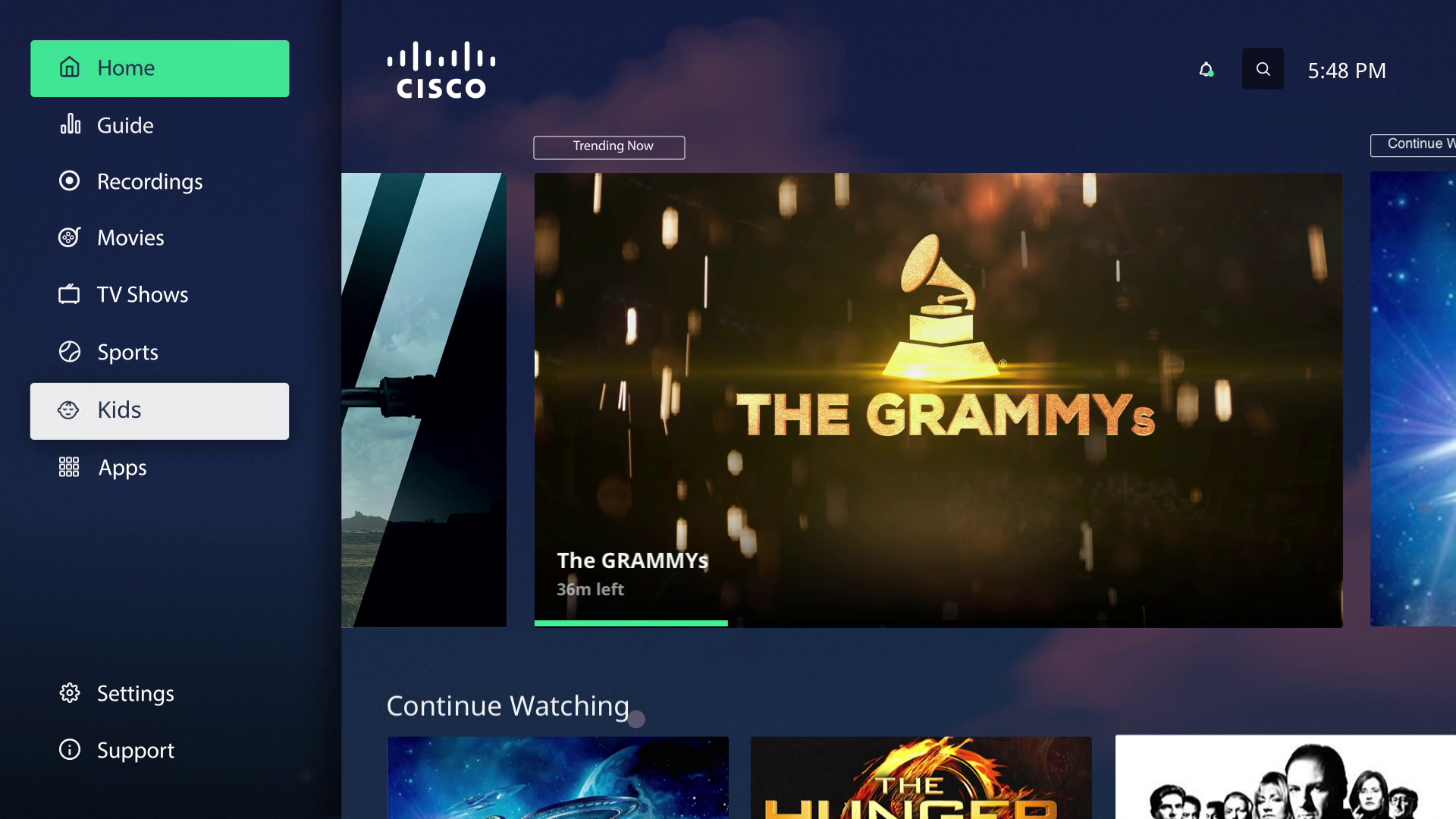

Early kiosk design (Kevin Meric)

As more decisions are being made by algorithms, we thought it was important to spark a conversation about whose ideas these systems inherit and what that might imply.

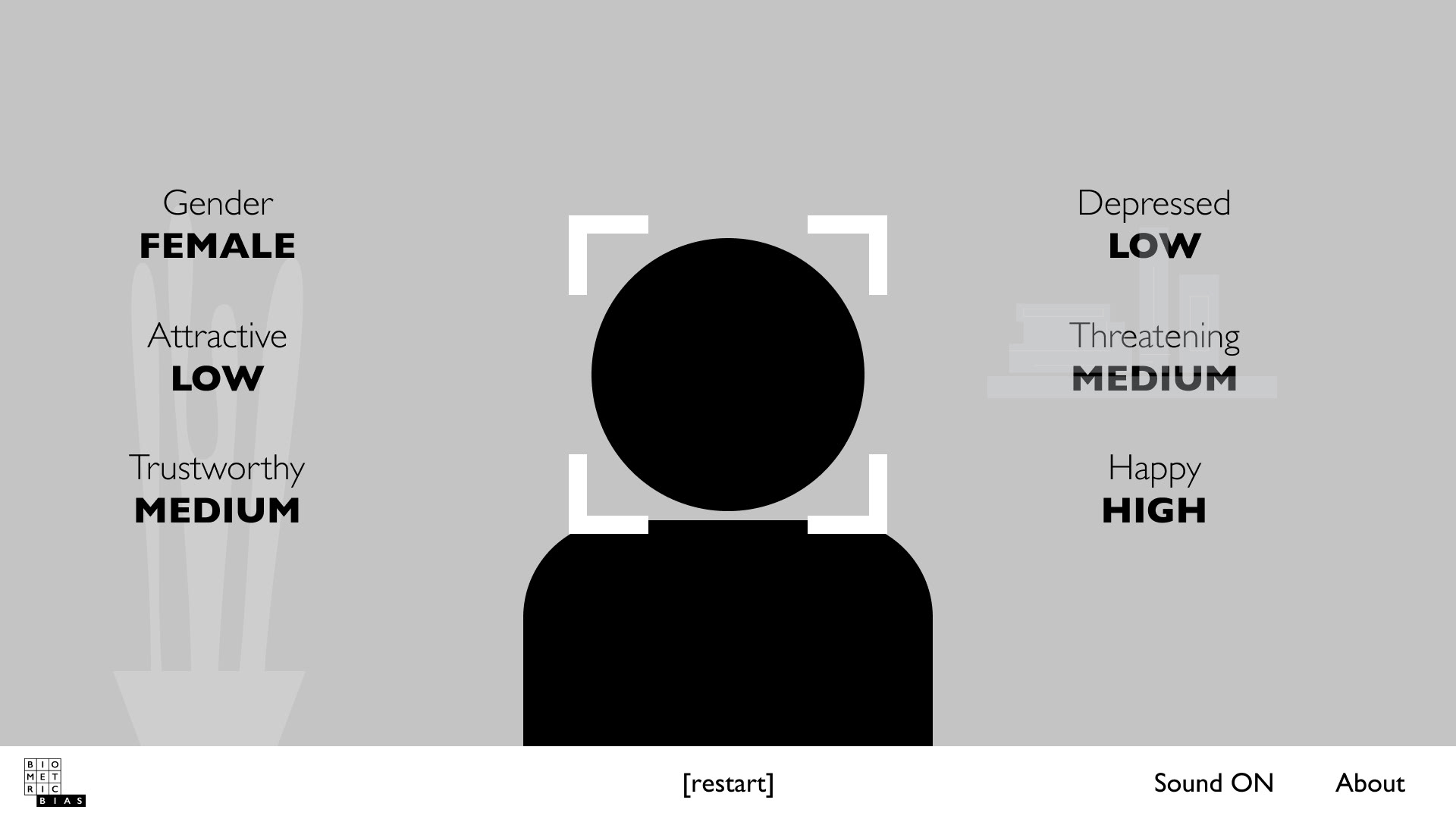

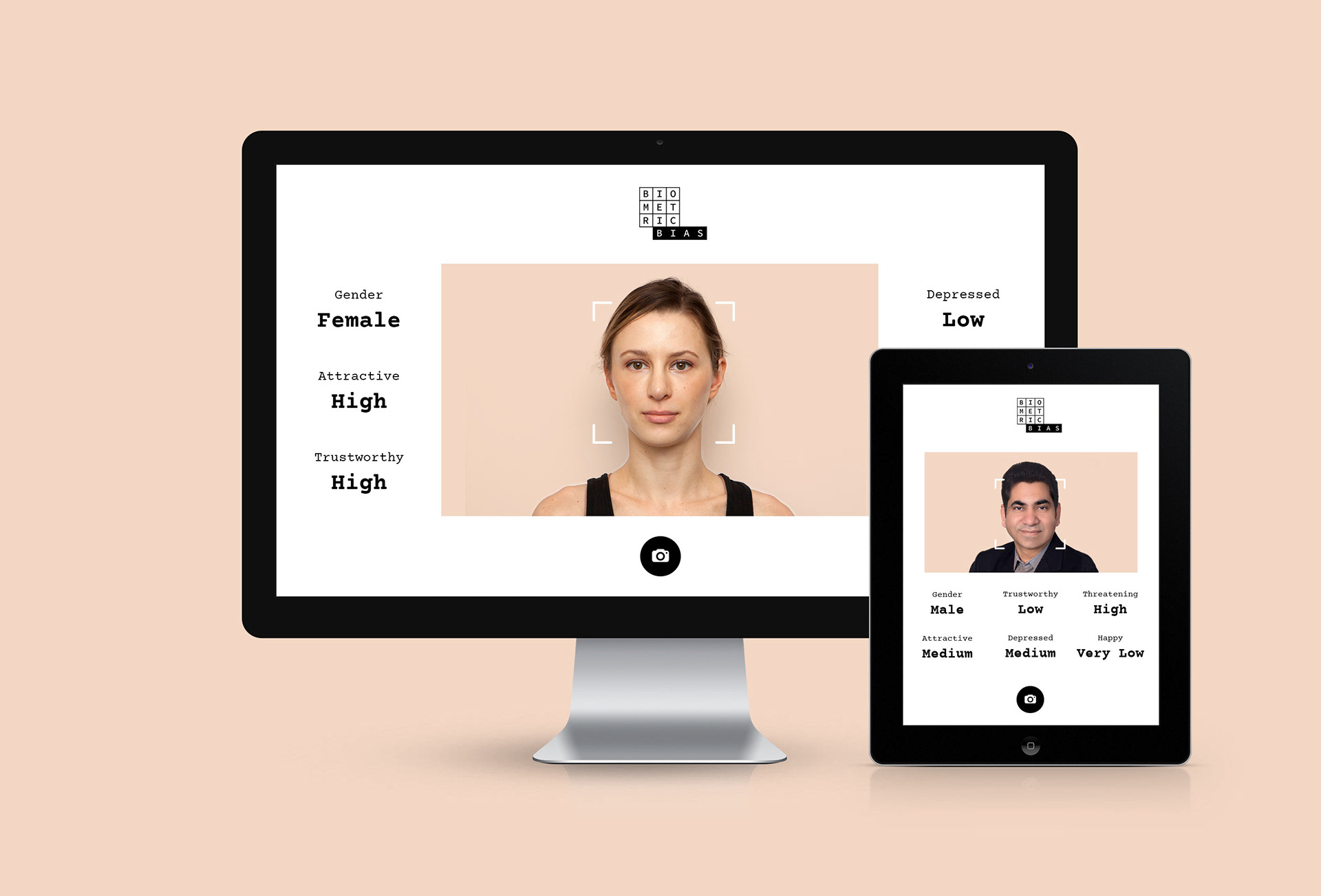

We trained an algorithm on the Chicago Face Database—a research dataset containing hundreds of photographs of faces rated by over a thousand participants—to analyse people’s faces on six parameters: gender, attractiveness, happiness, trustworthiness, threat and depression. We set up stations that would allow up to four people at once to “evaluate” themselves on individual screens.

We showed Biometric Bias at the Ontario Science Centre's Tech Art Fair. Over 15,000 people had the chance to try Biometric Bias, respond, and discuss it with us and with each other.

How (We Think) It Works

While neural networks are notoriously a “black box”, we can infer that the algorithm is, broadly speaking, indexing proportions: for example, the distance between the pupils relative to the width of the mouth. Of course, there's a name for the pseudoscience of correlating physical features to personality traits.
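To make "indexing proportions" a little more concrete, here is a minimal Python sketch of one such ratio, assuming the facial landmarks have already been located in the image. The landmark names, the coordinates and the `pupil_to_mouth_ratio` helper are all hypothetical, and this is not how our model actually works: a trained network learns its own internal features rather than computing hand-picked ratios like this one.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def pupil_to_mouth_ratio(landmarks):
    """Ratio of the distance between the pupils to the width of the mouth.

    `landmarks` is a hypothetical dict of (x, y) pixel coordinates with the
    keys 'left_pupil', 'right_pupil', 'mouth_left' and 'mouth_right'.
    """
    eye_span = distance(landmarks["left_pupil"], landmarks["right_pupil"])
    mouth_span = distance(landmarks["mouth_left"], landmarks["mouth_right"])
    return eye_span / mouth_span


# Made-up coordinates for illustration only, not from any real face.
face = {
    "left_pupil": (120, 150),
    "right_pupil": (184, 150),
    "mouth_left": (128, 240),
    "mouth_right": (176, 240),
}

print(round(pupil_to_mouth_ratio(face), 3))  # prints 1.333
```

Because it is a ratio, the value stays the same if the whole face moves closer to or further from the camera, which is part of why proportions make such a convenient (if dubious) signal.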

Visuals

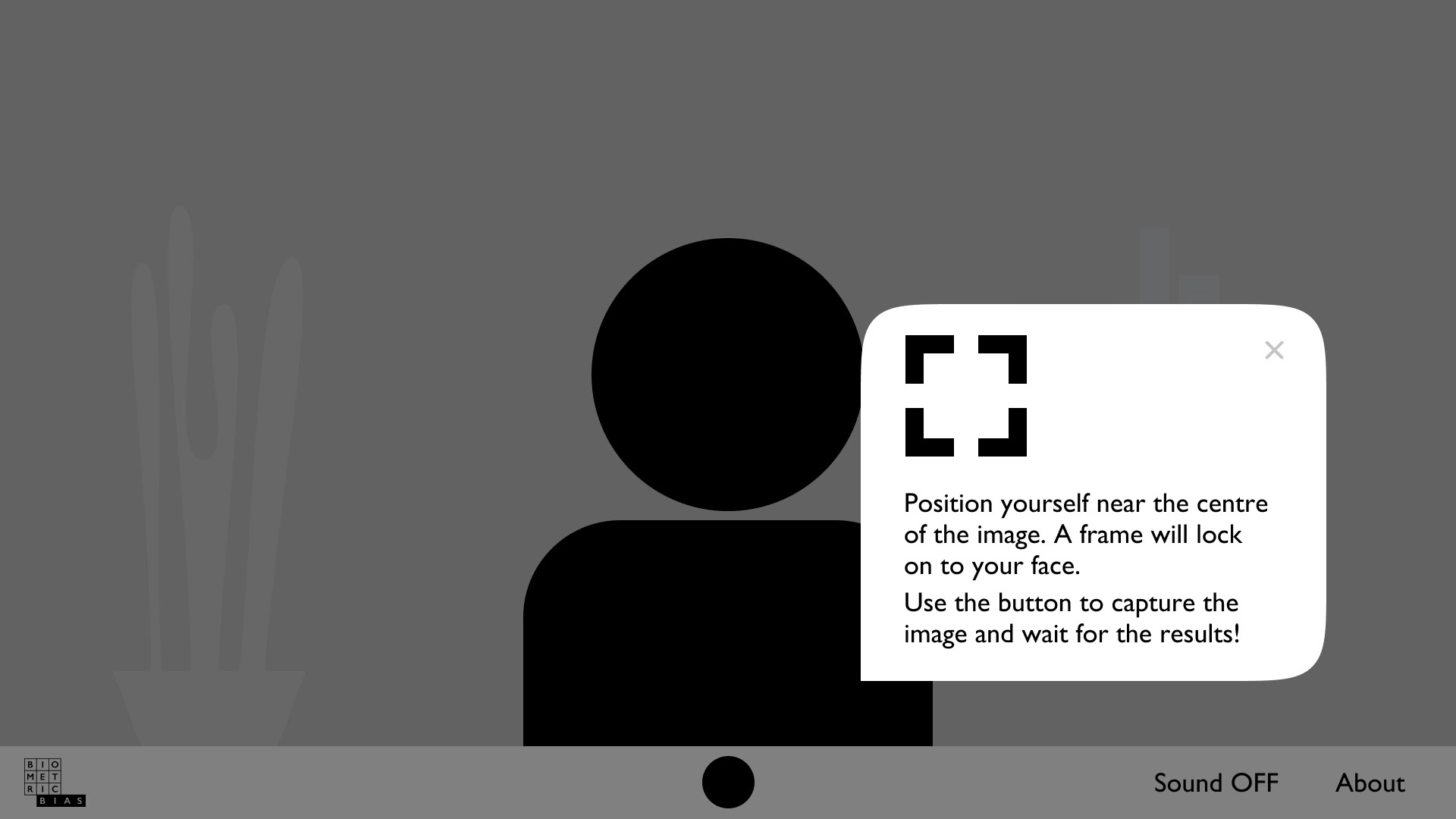

We kept things sparse with no colour, for expediency and to keep the focus on the camera feed. The logo mimics a convolutional neural network, which typically scans images in small grids, often 3x3. The word "bias" intentionally throws the rest of the mark off-centre, introducing a literal bias.

Anthony suggested Gill Sans, used in 2001: A Space Odyssey—a film which questions the neutrality of Artificial Intelligence. I paired that with the techie vibes of Source Code Pro.

We rented iPads and stands to display our installation in a super professional manner, but it turned out the iPads were a generation too old to run the software, so we ended up running the installation on our personal machines. I've learned the hard way that if thousands of children use your keyboard, you will get ill shortly thereafter.

We spoke to everyone who tried Biometric Bias, both before and after they used it, to give them some context and hear what they thought of the experience.

Observations

A few interesting patterns emerged from our time showcasing this piece:

1. By and large, people seemed concerned with “getting good scores” and would get upset when misgendered or rated as depressed or unattractive, despite being warned the algorithm would be biased.

2. Young children were overwhelmingly judged as female.

3. People with lighter hair tended to be rated as more attractive.

4. It is possible to influence the evaluation with a change in facial expression, but lighting seemed to move the needle far more.

It's important to remember that we are experts in neither facial recognition nor AI, and that this is an art project. We imagine a more sophisticated algorithm would produce different results even with the same training data. We also know algorithms like this are usually trained on much larger datasets.

Running a demo in my studio at MOCA Toronto

What's Next?

Inspired by projects like One Shared House 2030, I would like to push the aesthetic, narrative, educational and experiential components of Biometric Bias even further. I think the project has potential both as an online, from-the-comfort-of-your-home experience and as a site-specific installation. I am (slowly) mapping out the experience for future improvements.

We were approached in 2021 to tentatively participate in an episode of CBC's The Nature of Things dealing with algorithmic bias, but this would require finding the time and funding to further develop our project.